AWS Bedrock: Ultimate Guide to Transforming Your AI Journey

Introduction

Artificial intelligence is no longer just a buzzword. It’s transforming how businesses operate, innovate, and compete. But here’s the challenge: building AI applications from scratch is complex, expensive, and time-consuming. That’s where AWS Bedrock comes in as a game-changer.

AWS Bedrock is Amazon’s fully managed service that makes AI accessible to everyone. You don’t need a team of AI experts or massive infrastructure investments. Instead, you get access to powerful foundation models through a simple API. It’s like having an AI superpower without the superhero training.

If you’ve been curious about integrating AI into your applications, this guide is for you. We’ll explore what AWS Bedrock offers, how it works, and why businesses are choosing it over building custom solutions. Whether you’re a developer, business leader, or tech enthusiast, you’ll find valuable insights here.

By the end of this article, you’ll understand how AWS Bedrock can accelerate your AI initiatives. Let’s dive into what makes this service so compelling.

What Is AWS Bedrock?

AWS Bedrock (officially named Amazon Bedrock) is Amazon’s managed service for building and scaling generative AI applications. Think of it as your gateway to cutting-edge AI models without the headaches of infrastructure management. Amazon launched this service to democratize access to artificial intelligence.

The service provides access to foundation models from leading AI companies. You can choose from models by Anthropic, AI21 Labs, Cohere, Meta, Stability AI, and Amazon itself. This variety gives you flexibility to pick the right model for your specific needs.

What makes AWS Bedrock special is its serverless architecture. You don’t provision servers or manage infrastructure. The service handles scaling automatically based on your usage. This means you focus on building applications, not managing systems.

Security and privacy are built into the core of AWS Bedrock. Your data stays within your AWS environment. Models don’t train on your prompts or responses. This privacy guarantee is crucial for enterprises handling sensitive information.

Integration with other AWS services is seamless. You can connect Bedrock to your existing AWS workflows effortlessly. This compatibility reduces friction when adopting AI capabilities into current systems.

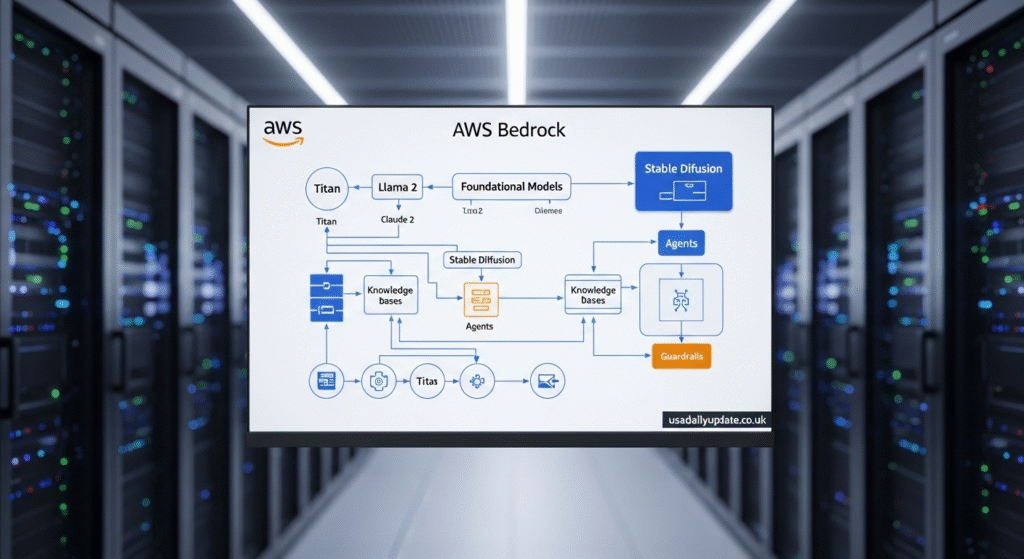

How AWS Bedrock Works

Understanding how AWS Bedrock functions helps you leverage it effectively. The architecture is designed for simplicity while maintaining powerful capabilities. Let me break down the key components that make it work.

At its core, AWS Bedrock uses APIs to connect your applications with foundation models. You send requests through these APIs. The service processes them using your chosen model. Then it returns responses to your application.
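The request and response cycle described above can be sketched in a few lines of Python. This is a minimal sketch, assuming the boto3 SDK and the `converse` operation of its `bedrock-runtime` client; the helper names `ask_model` and `make_runtime_client` and the default Region are illustrative assumptions, not part of the service. The client is passed in as a parameter so the function can be tried with a stand-in object before you have credentials.

```python
from typing import Any


def ask_model(client: Any, model_id: str, prompt: str, max_tokens: int = 256) -> str:
    """Send one user message through the Converse API and return the reply text."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    # The reply is a message whose content is a list of blocks; join the text blocks.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)


def make_runtime_client(region: str = "us-east-1") -> Any:
    """Create a real runtime client (needs boto3, credentials, and model access)."""
    import boto3  # imported lazily so the sketch loads without the SDK installed

    return boto3.client("bedrock-runtime", region_name=region)
```

With credentials in place, you would call `ask_model(make_runtime_client(), model_id, "Say hello.")`, where `model_id` is a model you have enabled in that Region.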

The process starts when you select a foundation model. Each model has different strengths and specializations. Some excel at text generation, others at conversation, and some at specific domains like coding or creative writing.

You customize model behavior using prompt engineering. This means crafting inputs that guide the model toward desired outputs. AWS Bedrock also supports fine-tuning, letting you adapt models with your own data.
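To make prompt engineering concrete, here is one common pattern: an instruction followed by a few worked examples and then the new input. This is a rough sketch of a general technique rather than anything specific to Bedrock; the function name `build_prompt` and the `Input:`/`Output:` labels are assumptions chosen for illustration.

```python
from typing import List, Tuple


def build_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble an instruction, worked examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    # End with an open "Output:" so the model continues with its answer.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)
```

Changing the instruction or swapping the examples is often the quickest way to steer a model before you consider fine-tuning.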

The service runs on AWS infrastructure in multiple Regions. Choosing a Region close to your users helps keep latency low, though model availability varies by Region. Amazon’s infrastructure handles the heavy computational lifting required for AI inference.

Pricing follows a pay-as-you-go model. In the default on-demand mode, you’re charged based on input tokens processed and output tokens generated, with no upfront commitments or minimum fees. This flexibility makes experimentation affordable.

Key Features of AWS Bedrock

AWS Bedrock packs numerous features that make AI development easier and more efficient. Understanding these capabilities helps you maximize the service’s potential. Let’s explore what sets it apart from other AI platforms.

Foundation Model Selection

You get access to multiple foundation models from various providers. This variety ensures you find the right fit for your use case. Anthropic’s Claude models excel at long-form content and complex reasoning.

AI21 Labs offers Jurassic models optimized for text generation. Cohere provides models designed for enterprise applications. Meta’s Llama models bring open-source flexibility. Stability AI delivers image generation capabilities.

Amazon’s Titan models are optimized for AWS integration. They offer competitive performance at attractive pricing. Having multiple options means you’re not locked into a single vendor or approach.

Customization and Fine-Tuning

AWS Bedrock allows you to customize models without starting from scratch. You can fine-tune supported foundation models using your proprietary data. This process adapts the model to your specific domain or industry.

The service provides tools for continued pre-training. This lets you incorporate domain-specific knowledge into models. The result is AI that understands your business context better.

You maintain full control over customized models. They remain private to your AWS account. Nobody else can access or use your customized versions.

Retrieval-Augmented Generation

Retrieval-augmented generation (RAG) is a powerful feature in AWS Bedrock. It connects foundation models to your data sources. This combination lets models answer questions using your specific information.

The technology works by retrieving relevant documents first. Then it provides that context to the model. The model generates responses based on your actual data rather than just its training.

This approach reduces hallucinations and improves accuracy. Your AI applications provide answers grounded in real information. For enterprises, this feature is invaluable for knowledge management and customer support.
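The retrieve-then-generate flow can be illustrated with a toy example. This is a simplified sketch, not how Bedrock implements retrieval: it ranks documents by plain word overlap as a stand-in for the vector search a real system would use, and the function names are assumptions made for illustration. The resulting prompt is what you would send to the model.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by word overlap with the question (a stand-in for vector search)."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(question: str, context: List[str]) -> str:
    """Place the retrieved passages ahead of the question so the model answers from them."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )
```

Because the model is told to rely on the supplied context, its answer can be traced back to specific passages in your own documents.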

Agents for Bedrock

Agents extend what foundation models can do. They let AI applications take actions, not just generate text. You can build agents that complete multi-step tasks autonomously.

These agents can call APIs, query databases, and interact with other services. They break down complex requests into manageable steps. Then they execute those steps to achieve the desired outcome.

Building agents used to require extensive coding and orchestration logic. AWS Bedrock simplifies this with pre-built components. You define the agent’s capabilities, and Bedrock handles the execution.
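To show the kind of orchestration logic an agent takes off your hands, here is a small sketch of a step executor. It is not the Agents for Bedrock API: the tools, the `run_plan` function, and the `{previous}` placeholder are all hypothetical, and the plan is hard-coded where a real agent would have the model decide the steps.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools; in a real agent these would call APIs or databases.
def lookup_order(order_id: str) -> str:
    orders = {"A100": "shipped", "A200": "processing"}
    return orders.get(order_id, "unknown")


def send_email(message: str) -> str:
    return f"email queued: {message}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "send_email": send_email,
}


def run_plan(plan: List[Tuple[str, str]]) -> List[str]:
    """Execute (tool_name, argument) steps in order and collect each result.

    The literal "{previous}" in an argument is replaced with the prior step's result.
    """
    results: List[str] = []
    previous = ""
    for tool_name, argument in plan:
        if tool_name not in TOOLS:
            raise ValueError(f"unknown tool: {tool_name}")
        previous = TOOLS[tool_name](argument.replace("{previous}", previous))
        results.append(previous)
    return results
```

For example, a plan of `[("lookup_order", "A100"), ("send_email", "Your order is {previous}")]` looks up the order and then feeds its status into the email step.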

Security and Compliance

Security features are comprehensive in AWS Bedrock. Data encryption happens both in transit and at rest. You control access using AWS Identity and Access Management policies.

The service supports compliance with major regulatory frameworks. It is in scope for standards such as GDPR and SOC, and it is HIPAA eligible. For regulated industries, these assurances are essential for adoption.

AWS Bedrock doesn’t use your data to train models. This guarantee protects your intellectual property and customer information. Your prompts and outputs remain confidential to your account.

Use Cases for AWS Bedrock

AWS Bedrock enables countless applications across industries. Understanding practical use cases helps you identify opportunities. Let’s explore how organizations are leveraging this technology.

Content Creation and Marketing

Marketing teams use AWS Bedrock to generate content at scale. Blog posts, product descriptions, and social media content can be created efficiently. The AI maintains brand voice while producing diverse content.

You can automate email campaigns with personalized messaging. The models adapt content based on customer segments. This personalization increases engagement without manual effort.

Creative teams use it for brainstorming and ideation. Generate multiple concepts quickly, then refine the best ones. This accelerates the creative process without replacing human judgment.

Customer Service and Support

Chatbots powered by AWS Bedrock provide intelligent customer support. They understand context and provide relevant answers. These bots handle routine inquiries, freeing human agents for complex issues.

The technology enables 24/7 support without round-the-clock staffing. Customers get immediate responses regardless of time zone. Satisfaction tends to improve when questions are answered quickly.

You can integrate knowledge bases for accurate information retrieval. The RAG feature ensures responses are based on your documentation. This reduces errors and maintains consistency across interactions.

Code Generation and Development

Developers use AWS Bedrock to accelerate coding tasks. Generate boilerplate code, write functions, and debug issues. The AI understands multiple programming languages and frameworks.

Code review and documentation become faster. Models can explain complex code sections in plain language. They can also suggest optimizations and identify potential bugs.

This doesn’t replace developers but augments their capabilities. Routine tasks are automated, letting developers focus on architecture and problem solving. Productivity increases without sacrificing quality.

Data Analysis and Insights

Business analysts leverage AWS Bedrock for data interpretation. The AI can analyze datasets and identify patterns. It generates insights in natural language that non-technical stakeholders understand.

Report generation becomes automated. Feed data to the model and receive comprehensive analysis. This saves hours of manual report writing.

Predictive analytics applications benefit from foundation models. Combine traditional analytics with AI generated insights. The result is more comprehensive business intelligence.

Document Processing and Summarization

Organizations process massive amounts of documents daily. AWS Bedrock can summarize lengthy reports into key points. This helps executives digest information quickly.

Legal and compliance teams use it to review contracts. The AI identifies important clauses and potential issues. This speeds up contract analysis significantly.

Research becomes more efficient when AI can summarize academic papers. Students and researchers extract relevant information faster. Literature reviews that once took weeks can be shortened considerably.

Benefits of Using AWS Bedrock

Choosing AWS Bedrock offers numerous advantages over building custom AI solutions. These benefits explain why businesses are rapidly adopting the service. Let’s examine what you gain by using this platform.

Reduced Time to Market

Building AI applications from scratch takes months or years. With AWS Bedrock, you can deploy in weeks or days. Pre-trained models eliminate the lengthy training phase.

You bypass the need to collect massive training datasets. Foundation models already understand language, code, and various domains. You simply adapt them to your specific needs.

This speed advantage is crucial in competitive markets. Being first with AI-enhanced products creates significant advantages. AWS Bedrock lets you move fast without sacrificing quality.

Cost Effectiveness

Training foundation models costs millions of dollars. Infrastructure requirements are astronomical for most organizations. AWS Bedrock eliminates these capital expenses entirely.

You pay only for what you use with no upfront costs. This makes AI accessible to startups and small businesses. Experimentation becomes affordable when you’re not committed to expensive infrastructure.

The serverless model means no wasted capacity. Resources scale automatically with demand. During quiet periods, you’re not paying for idle servers.

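Access to Cutting-Edge Technology

AWS Bedrock provides access to the latest AI models. As providers release new model versions, they typically appear on Bedrock under new model identifiers. Adopting an improvement usually means changing the model ID in your request and retesting, not retraining or rebuilding infrastructure.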

Multiple model providers mean you’re not dependent on a single technology. If a better model emerges, you can switch easily. This flexibility protects your investment in AI.

Amazon continuously adds features and capabilities. The service evolves with the AI landscape. Your applications stay current without constant rebuilding.

Simplified Operations

Managing AI infrastructure is complex and resource-intensive. AWS Bedrock handles all operational aspects. Your team focuses on application logic, not infrastructure management.

Scaling happens automatically without capacity planning. Whether you have ten users or ten million, the service adapts within your account’s service quotas. This elasticity is built into the platform.

Monitoring and observability are integrated with AWS CloudWatch. You track usage, performance, and costs easily. Troubleshooting is straightforward with comprehensive logging.

Enterprise-Grade Security

Security isn’t an afterthought with AWS Bedrock. It’s architected into every layer of the service. Your data remains within your AWS environment under your control.

Compliance certifications reduce regulatory burden. You inherit AWS’s compliance posture for major frameworks. This accelerates adoption in regulated industries.

Network isolation and encryption protect sensitive data. Access controls are granular and flexible. You define exactly who can use which models for what purposes.

Getting Started with AWS Bedrock

Ready to try AWS Bedrock? Getting started is straightforward. You don’t need specialized AI expertise to begin. Let me walk you through the initial steps.

First, you need an AWS account. If you don’t have one, creating it takes just minutes. AWS offers free-tier benefits for many services, though Bedrock pricing is pay-as-you-go.

Navigate to the AWS Bedrock console through the AWS Management Console. The interface is intuitive and guides you through setup. You’ll need to enable model access for the foundation models you want to use.

Model access requires accepting provider terms and conditions. Some models are available immediately. Others may require approval, especially for certain use cases. This process typically completes within minutes to hours.

Once enabled, you can start experimenting through the console. The playground environment lets you test models without writing code. Try different prompts and see how models respond.

For production use, you’ll integrate via API. AWS provides SDKs for popular programming languages. Documentation includes code examples to accelerate development.
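As a first step with the SDK, it helps to see which models are offered in your Region. The following is a minimal sketch, assuming boto3 and the `list_foundation_models` operation of its `bedrock` client; the helper names and the default Region are illustrative assumptions. As before, the client is passed in so the grouping logic can be tried without credentials.

```python
from collections import defaultdict
from typing import Any, Dict, List


def models_by_provider(client: Any) -> Dict[str, List[str]]:
    """Group available foundation model IDs by provider name."""
    response = client.list_foundation_models()
    grouped: Dict[str, List[str]] = defaultdict(list)
    for summary in response.get("modelSummaries", []):
        grouped[summary["providerName"]].append(summary["modelId"])
    return {provider: sorted(ids) for provider, ids in grouped.items()}


def make_control_plane_client(region: str = "us-east-1") -> Any:
    """Create a real Bedrock client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the sketch loads without the SDK installed

    return boto3.client("bedrock", region_name=region)
```

Note that a model appearing in this list does not mean access is enabled for your account; that still happens through the model access step described earlier.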

Choosing the Right Model

Selecting the appropriate foundation model is crucial. Different models excel at different tasks. Consider your specific requirements before deciding.

For general text generation, models like Claude or Jurassic work well. They handle diverse content types effectively. Their broad training makes them versatile.

Conversational applications benefit from models optimized for dialogue. Claude excels at maintaining context across long conversations. This makes it ideal for chatbots and virtual assistants.

Code generation requires models trained on programming languages. Claude and certain Amazon Titan models handle code well. They understand syntax and can debug effectively.

Cost is another consideration. Models vary in pricing. Some are more economical for high volume applications. Others provide better quality at higher costs.

Best Practices for Implementation

Start with clear objectives for your AI application. Knowing what you want to achieve guides model selection and prompt design. Vague goals lead to disappointing results.

Invest time in prompt engineering. Well-crafted prompts dramatically improve output quality. Experiment with different phrasings and structures.

Implement error handling and fallback mechanisms. AI models sometimes produce unexpected outputs. Your application should handle these gracefully.
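One way to handle failures gracefully is to retry with exponential backoff and then fall back to a second model. The sketch below is one possible approach under stated assumptions: `call_model` is a hypothetical function you supply that takes a model ID and a prompt and returns text, and the retry counts and delays are arbitrary defaults.

```python
import time
from typing import Callable, Optional, Sequence


def generate_with_fallback(
    call_model: Callable[[str, str], str],
    model_ids: Sequence[str],
    prompt: str,
    retries_per_model: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try each model in order, retrying with exponential backoff before moving on."""
    last_error: Optional[Exception] = None
    for model_id in model_ids:
        for attempt in range(retries_per_model):
            try:
                text = call_model(model_id, prompt)
                if text.strip():
                    return text
                # Treat an empty reply as a failure so it is retried.
                last_error = ValueError(f"empty response from {model_id}")
            except Exception as error:  # narrow this to throttling/timeouts in real code
                last_error = error
            if attempt < retries_per_model - 1:
                sleep(base_delay * (2 ** attempt))
    raise RuntimeError("all models failed") from last_error
```

Your application can catch the final `RuntimeError` and show a friendly message or a canned answer instead of an error page.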

Monitor costs closely, especially during development. It’s easy to underestimate usage during testing. Set up billing alerts to avoid surprises.

Test thoroughly with real-world data. Synthetic test cases may not reveal edge cases. Real user inputs often behave differently than you expect.

AWS Bedrock Pricing

Understanding AWS Bedrock pricing helps you budget effectively. The cost structure is transparent and usage-based. Let’s break down how you’re charged for using the service.

Pricing follows a token-based model. Tokens are pieces of text, roughly equivalent to words or word fragments. You’re charged for both input tokens you send and output tokens the model generates.
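The arithmetic behind token-based billing is simple enough to sketch. The function names below are illustrative, and any rates you pass in are your own inputs; real rates vary by model and should be taken from the pricing page.

```python
def estimate_request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Return the dollar cost of one request under per-1,000-token pricing."""
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost


def estimate_monthly_cost(cost_per_request: float, requests_per_month: int) -> float:
    """Scale a per-request estimate to a monthly figure."""
    return cost_per_request * requests_per_month
```

With hypothetical rates of $0.003 per 1,000 input tokens and $0.015 per 1,000 output tokens, a request with 2,000 input tokens and 500 output tokens comes to about $0.0135, or roughly $135 for 10,000 such requests.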

Different models have different pricing. Generally, more capable models cost more per token. Specialized models may have premium pricing.

For example, Claude models from Anthropic are billed at per-token rates that differ by model version. Amazon Titan models typically offer competitive pricing. You can find exact pricing on the AWS Bedrock pricing page.

On-demand usage has no minimum fees or long-term commitments. You can start small and scale as needed. This flexibility is perfect for testing before committing to larger deployments.

Fine-tuned models incur additional costs. You pay for the compute time during training. Then you pay for inference with your customized model.

Additional costs apply if you use RAG features. Source documents kept in Amazon S3 incur storage fees, and the vector store that indexes them is billed separately. Depending on the vector store you choose, this can be a noticeable share of the total, so include it in your estimate.

Cost Optimization Strategies

You can reduce costs through several strategies. Caching responses for common queries eliminates redundant processing. This works well for FAQs or repetitive content.
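A response cache can be as simple as a dictionary keyed by the model and the prompt. The following sketch assumes a hypothetical `call_model` function that performs the actual request; it keeps everything in memory and normalizes case and whitespace so trivially different phrasings share one entry. A production cache would also need expiry and shared storage.

```python
import hashlib
from typing import Callable, Dict


class ResponseCache:
    """In-memory cache keyed by a hash of the model ID and normalized prompt."""

    def __init__(self, call_model: Callable[[str, str], str]) -> None:
        self._call_model = call_model
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model_id: str, prompt: str) -> str:
        # Collapse whitespace and lowercase so near-identical prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(f"{model_id}\n{normalized}".encode("utf-8")).hexdigest()

    def generate(self, model_id: str, prompt: str) -> str:
        key = self._key(model_id, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        self._store[key] = self._call_model(model_id, prompt)
        return self._store[key]
```

Every cache hit is a request you don’t pay for, which is why this works best for FAQs and other questions that repeat often.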

Choosing the right model for your use case matters. Don’t use expensive models when simpler ones suffice. Match model capability to task complexity.
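Matching capability to task complexity can be expressed as a small selection rule: pick the cheapest model that is good enough. This is a sketch with made-up inputs; the `ModelOption` fields, the capability tiers, and any prices you supply are hypothetical and would come from your own evaluation and the pricing page.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelOption:
    model_id: str        # hypothetical identifier
    capability: int      # hypothetical tier: 1 = basic, 3 = most capable
    price_per_1k: float  # hypothetical blended price in dollars


def cheapest_capable(options: List[ModelOption], required_capability: int) -> ModelOption:
    """Return the lowest-priced model whose capability meets the requirement."""
    candidates = [option for option in options if option.capability >= required_capability]
    if not candidates:
        raise ValueError("no model meets the required capability")
    return min(candidates, key=lambda option: option.price_per_1k)
```

A simple classification task might need only tier 1, while a multi-step reasoning task might require tier 3; routing each request this way keeps the expensive model for the work that needs it.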

Optimizing prompts reduces token usage. Shorter, more precise prompts use fewer input tokens. Requesting concise outputs reduces output tokens.

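Batching requests can improve efficiency. Instead of processing one request at a time, group similar requests that don’t need an immediate answer. Bedrock offers a batch inference mode for supported models, which reduces overhead and may lower costs.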

Monitoring usage patterns helps identify waste. AWS Cost Explorer shows where money goes. Regular reviews reveal optimization opportunities.

Comparing AWS Bedrock to Alternatives

AWS Bedrock isn’t the only AI service available. Understanding how it compares to alternatives helps you make informed decisions. Let’s look at other options and how they stack up.

Azure OpenAI Service

Microsoft’s Azure OpenAI Service provides access to OpenAI models. It offers GPT-4 and other OpenAI technologies. The service is similar in concept to AWS Bedrock.

Azure OpenAI excels if you’re already in the Microsoft ecosystem. Integration with Azure services is seamless. For Microsoft-centric organizations, this is a natural choice.

AWS Bedrock offers more model variety. You’re not limited to OpenAI models. This flexibility can be advantageous for specific use cases.

Google Cloud Vertex AI

Google’s Vertex AI provides AI model access and development tools. It includes Google’s own models, such as Gemini and the earlier PaLM family. The platform is comprehensive for AI development.

Vertex AI offers strong integration with Google Cloud services. If you use BigQuery or other Google tools, integration is smooth. Google’s AI research expertise shows in model quality.

AWS Bedrock’s multi-provider approach offers more options. You can compare models easily without leaving the platform. This vendor diversity reduces lock-in risks.

Building Custom Models

Some organizations consider building their own models. This provides maximum control and customization. However, the costs and complexity are substantial.

Building from scratch requires massive datasets. You need significant computational resources. Teams of specialized AI researchers are necessary.

AWS Bedrock eliminates these requirements. You get enterprise quality AI without the investment. For most organizations, this is the practical choice.

Open Source Solutions

Open-source models like Llama are available for self-hosting. This approach offers flexibility and no per-token costs. However, you manage all infrastructure yourself.

Self-hosting requires DevOps expertise. You handle scaling, security, and updates. The operational burden can be significant.

AWS Bedrock simplifies operations while offering some open-source models. You get the benefits without the operational complexity. This middle ground appeals to many organizations.

Security and Compliance Considerations

Security is paramount when implementing AI solutions. AWS Bedrock addresses these concerns comprehensively. Let’s explore the security features and compliance aspects.

Data Protection

Your prompts and responses are processed within AWS and are not shared with model providers. They stay associated with your account. Models process data without storing it.

Encryption protects data in transit using TLS. Data at rest is encrypted using AWS Key Management Service. You control encryption keys for maximum security.

AWS Bedrock doesn’t use your data for model training. This guarantee is crucial for proprietary information. Your intellectual property remains protected.

Access Control

AWS Identity and Access Management controls who can use Bedrock. You define granular permissions for users and applications. Role-based access ensures appropriate authorization.
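Granular permissions usually take the form of an IAM policy that allows invocation of specific models only. Here is a minimal sketch that builds such a policy document in Python; the function name and the statement ID are illustrative assumptions, and you should check the actions and resource ARNs against the IAM documentation for Bedrock before using them.

```python
import json
from typing import Any, Dict, List


def invoke_only_policy(region: str, model_ids: List[str]) -> Dict[str, Any]:
    """Build an IAM policy document allowing invocation of specific foundation models."""
    resources = [f"arn:aws:bedrock:{region}::foundation-model/{model_id}" for model_id in model_ids]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowInvokeSelectedModels",  # illustrative statement ID
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


def policy_as_json(region: str, model_ids: List[str]) -> str:
    """Serialize the policy so it can be pasted into the IAM console or a template."""
    return json.dumps(invoke_only_policy(region, model_ids), indent=2)
```

Attaching a policy like this to an application role means the application can call only the models you list, and nothing else in the service.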

Service control policies let you restrict usage across accounts. This is useful for large organizations with multiple teams. Central governance maintains security standards.

API calls are logged in AWS CloudTrail. This provides audit trails for compliance. You can track who accessed what models when.

Compliance Certifications

AWS Bedrock complies with major regulatory frameworks. GDPR compliance addresses European data protection requirements. HIPAA eligibility supports healthcare applications.

SOC 2 reports validate security controls. This is important for enterprise procurement. ISO certifications demonstrate compliance with international standards.

Regular third-party audits verify security practices. AWS publishes compliance reports for customer review. This transparency builds trust.

Network Security

Virtual Private Cloud endpoints keep traffic private. Data doesn’t traverse the public internet. This reduces exposure to threats.

You can implement network access controls. Restrict which networks can reach Bedrock APIs. This adds another security layer.

DDoS protection is built into AWS infrastructure. This helps keep the service endpoints available during attacks. Amazon’s scale provides robust protection.

Future of AWS Bedrock

AWS Bedrock continues evolving rapidly. Amazon invests heavily in expanding capabilities. Understanding the roadmap helps you plan strategically.

Amazon regularly adds new foundation models. As AI companies release better models, they become available on Bedrock. You benefit from continuous improvement without migration.

Multimodal capabilities are expanding. Some models on Bedrock already accept images as input, and support for audio and video continues to grow. This enables richer applications beyond text.

Performance improvements happen continuously. Models become faster and more accurate. AWS optimizes infrastructure for better efficiency.

Pricing often becomes more competitive over time. As the service matures and scales, costs typically decrease. Early adopters benefit from ongoing price reductions.

Integration with other AWS services deepens. Expect tighter connections to databases, analytics, and IoT. This makes building comprehensive solutions easier.

Conclusion

AWS Bedrock represents a significant leap forward in AI accessibility. You can now build sophisticated AI applications without massive investments. The combination of powerful models, serverless architecture, and enterprise security makes it compelling.

Whether you’re enhancing customer experiences, automating workflows, or generating content, AWS Bedrock enables it. The service removes traditional barriers to AI adoption. You focus on creating value, not managing infrastructure.

As AI becomes essential for competitive advantage, AWS Bedrock provides a practical path forward. Its flexibility lets you start small and scale as you learn. The multi-provider approach protects against vendor lock-in.

Are you ready to explore how AWS Bedrock can transform your applications? Start experimenting today and discover what’s possible. The future of AI is here, and it’s more accessible than ever.

Frequently Asked Questions

What is AWS Bedrock used for? AWS Bedrock is used for building and deploying generative AI applications. It provides access to foundation models through APIs for tasks like content generation, chatbots, code assistance, data analysis, and document processing. Organizations use it to add AI capabilities without building models from scratch.

How much does AWS Bedrock cost? AWS Bedrock uses pay-as-you-go pricing based on tokens processed. You pay for input tokens sent and output tokens generated. Pricing varies by model, with no upfront costs or minimum commitments for on-demand use. Fine-tuning and knowledge base storage incur additional charges.

Is AWS Bedrock better than OpenAI? AWS Bedrock and OpenAI serve different purposes. Bedrock provides access to multiple model providers including Anthropic, Cohere, and Amazon, while OpenAI offers its own models. Bedrock excels in AWS integration and enterprise security, while OpenAI provides direct access to GPT models.

What models are available on AWS Bedrock? AWS Bedrock offers models from Anthropic (Claude), AI21 Labs (Jurassic), Cohere, Meta (Llama), Stability AI, and Amazon (Titan). Each provider offers different model sizes and capabilities optimized for various use cases like text generation, conversation, or code.

Can I fine-tune models on AWS Bedrock? Yes, AWS Bedrock supports fine-tuning selected foundation models with your own data. You can customize models to your specific domain or use case while maintaining privacy. The service provides tools for continued pre-training and adaptation without requiring deep AI expertise.

Is AWS Bedrock secure for enterprise use? Yes, AWS Bedrock provides enterprise-grade security. Data is encrypted in transit and at rest, stays within your AWS account, and isn’t used for model training. The service supports compliance with major frameworks like GDPR, HIPAA, and SOC 2.

What is the difference between AWS SageMaker and Bedrock? AWS SageMaker is for building, training, and deploying custom machine learning models. AWS Bedrock provides ready-to-use foundation models through APIs. SageMaker requires more ML expertise but offers more customization, while Bedrock prioritizes ease of use and quick deployment.

Does AWS Bedrock work offline? No, AWS Bedrock is a cloud-based service requiring internet connectivity. All processing happens on AWS infrastructure. For offline AI applications, you would need to deploy models locally using different approaches like self-hosted open-source models.

Can I use AWS Bedrock with other cloud providers? AWS Bedrock APIs can be called from applications running anywhere with internet access. However, you need an AWS account and the service runs on AWS infrastructure. You can integrate Bedrock with multi-cloud architectures through API calls.

How do I get started with AWS Bedrock? Start by creating an AWS account and accessing the Bedrock console. Enable model access for the foundation models you want to use. Experiment in the playground, then integrate via APIs using AWS SDKs. Documentation provides code examples to accelerate development.
